Ghost in the Codex Machine

How a release-only pre-main change made CUDA/MKL tool calls quietly fall back to slow paths.

Executive Summary

The symptom wasn't a crash. It was worse: "Codex feels slow" in certain real developer environments.

In release builds, a pre-main hardening routine executed before main() and stripped LD_* / DYLD_* environment variables. For a subset of users (CUDA, Conda/MKL, HPC module stacks, custom library layouts), that meant critical dynamic libraries could no longer be discovered inside Codex tool subprocesses. The downstream effect was dramatic but quiet: BLAS fell back to slow implementations; GPU workflows fell back to CPU; and engineers lost time chasing the wrong layer because nothing obviously "failed."

I traced the behavior back to the introducing change, reduced it to a minimal reproduction with representative measurements, and opened an upstream issue that maintainers could validate quickly. The fix shipped and is called out in the rust-v0.80.0 release notes with attribution.

- "It works in my terminal, but it's slow inside tool calls."

- No obvious error messages; the system just quietly falls back.

- Only reproduces in release builds and only for certain environment layouts.

- The smoking gun: environment variables differ inside subprocesses vs the user's shell.

- Tool correctness: Agents rely on subprocesses behaving like the user's environment.

- Evaluation stability: Silent fallbacks create noisy perf profiles and flaky "it feels slow" reports.

- Trust: If the substrate silently rewrites execution, higher-level behavior becomes harder to explain and debug.

Related Context

Timeline

PR #4521 merges (process hardening executes pre-main in CLI release builds)

First affected release ships (rust-v0.43.0)

Earlier "Ghosts in the Codex Machine" investigation published (useful context; this regression still remained)

I open issue #8945 with root cause + reproduction + benchmarks

Fix merged in PR #8951

Fix shipped in rust-v0.80.0 (release notes call-out)

The Problem

What Users Experienced

When Codex runs tools (Python, Node, build systems, CLIs), it does so by spawning subprocesses. For dev workflows, the correct baseline is simple: subprocesses should inherit the developer's environment unless a specific policy says otherwise.

When LD_LIBRARY_PATH / DYLD_LIBRARY_PATH disappears, the failure mode is often not a clean error. Instead, the dynamic linker "finds something else," and performance collapses:

- BLAS libraries fall back to unaccelerated implementations.

- CUDA tooling may fall back to CPU execution.

- Some enterprise/custom libraries fail to load entirely.
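The silent part is easy to see in isolation. The sketch below is a stand-in, not Codex code: it spawns a child Python process twice, once with a hypothetical library directory (`/opt/example/lib`) on `LD_LIBRARY_PATH` and once with the variable removed, and reports what each child sees.

```python
import os
import subprocess
import sys

# Child program: report the loader path it was started with.
CHILD = "import os; print(os.environ.get('LD_LIBRARY_PATH', ''))"


def child_view(env):
    """Spawn a child Python with `env`; return (exit code, LD_LIBRARY_PATH it sees)."""
    result = subprocess.run(
        [sys.executable, "-c", CHILD],
        env=env,
        capture_output=True,
        text=True,
        check=False,
    )
    return result.returncode, result.stdout.strip()


# Hypothetical library directory, appended to whatever is already set.
existing = os.environ.get("LD_LIBRARY_PATH", "")
loader_path = os.pathsep.join(p for p in (existing, "/opt/example/lib") if p)

inherited = dict(os.environ, LD_LIBRARY_PATH=loader_path)
stripped = {k: v for k, v in inherited.items() if k != "LD_LIBRARY_PATH"}

print("inherited:", child_view(inherited))
print("stripped: ", child_view(stripped))
```

On a typical system both children start and exit normally; only the reported value differs. Nothing at the process boundary signals that the variable went missing, which is why the downstream fallback is quiet.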

Root Cause

Why This Was a "Ghost"

The stripping happened before main() and before most instrumentation/logging was initialized:

- It was implemented as a pre-main constructor in release builds (#[ctor::ctor]-style behavior).

- It was silent by default (no warning when variables were removed).

- It only reproduced on specific environment layouts (non-RPATH installs, legacy Conda, HPC module stacks, custom vendor libraries).

- Users could see LD_LIBRARY_PATH set correctly in their shell, but inside codex exec it was empty.
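For illustration, the effect of the routine can be modelled in a few lines of Python. This is a model of the behavior described above, not the Codex source (which is Rust and ran before main()); the function name and the sample values are hypothetical.

```python
LOADER_PREFIXES = ("LD_", "DYLD_")


def strip_loader_vars(env):
    """Return (cleaned_env, removed_names) with every LD_* / DYLD_* entry dropped."""
    removed = sorted(name for name in env if name.startswith(LOADER_PREFIXES))
    cleaned = {name: value for name, value in env.items() if name not in removed}
    return cleaned, removed


# Sample environment with made-up values.
sample = {
    "PATH": "/usr/bin",
    "LD_LIBRARY_PATH": "/opt/example/lib",
    "DYLD_LIBRARY_PATH": "/opt/example/lib",
    "LDFLAGS": "-L/opt/example/lib",  # no underscore after LD, so it is kept
}

cleaned, removed = strip_loader_vars(sample)
print("removed:", removed)
print("kept:   ", sorted(cleaned))
```

The model returns the removed names so a caller could log them. The behavior described above discarded that information, which is a large part of why it was hard to spot.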

Evidence and Reproduction

How I Proved It

I approached this like a systems regression: reduce the behavior to a small, testable claim and then measure the consequences.

The core claim to test is simple: tool subprocesses should inherit the user's environment unless explicitly documented otherwise.

# Outside the agent/tool runner (baseline)

python -c "import os; print('LD_LIBRARY_PATH=', os.environ.get('LD_LIBRARY_PATH') or ''); print('DYLD_LIBRARY_PATH=', os.environ.get('DYLD_LIBRARY_PATH') or '')"

# Inside the tool runner (should match baseline)

codex exec -- python -c "import os; print('LD_LIBRARY_PATH=', os.environ.get('LD_LIBRARY_PATH') or ''); print('DYLD_LIBRARY_PATH=', os.environ.get('DYLD_LIBRARY_PATH') or '')"

If the inside value is empty (or different), downstream tooling can silently pick different dynamic libraries and fall back to slower paths.
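The same comparison can be scripted so the difference is reported by name. This is a sketch under assumptions: `prefix` is a hypothetical parameter holding whatever runner invocation wraps the probe (for example the `codex exec --` form used above), and the runner is assumed to pass the child's stdout through with the probe's JSON on the last line.

```python
import json
import subprocess
import sys

# Probe: print the loader-related variables the process was started with, as JSON.
PROBE = (
    "import json, os; "
    "print(json.dumps({k: v for k, v in os.environ.items() "
    "if k.startswith(('LD_', 'DYLD_'))}))"
)


def loader_env(prefix=()):
    """Run the probe, optionally behind a runner prefix, and return its loader vars."""
    result = subprocess.run(
        [*prefix, sys.executable, "-c", PROBE],
        capture_output=True,
        text=True,
        check=True,
    )
    # Assumption: the probe's JSON is the last non-empty line of stdout.
    last_line = [line for line in result.stdout.splitlines() if line.strip()][-1]
    return json.loads(last_line)


def changed_inside(baseline, inside):
    """Names whose value is missing or different inside the runner."""
    return sorted(name for name, value in baseline.items() if inside.get(name) != value)


baseline = loader_env()
print("baseline loader vars:", sorted(baseline))
# With a runner installed, compare against it, e.g.:
#   inside = loader_env(prefix=("codex", "exec", "--"))
#   print(changed_inside(baseline, inside))
```

An empty list from `changed_inside` means the loader variables survived the trip; any name in the list is a variable the runner dropped or rewrote.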

- Correlated the introducing change with reports consistent with "environment disappeared" behavior.

- Validated env var inheritance behavior directly inside subprocess tool calls.

- Used a small BLAS/CUDA-sensitive workload to quantify the performance impact of a library discovery fallback.

- Documented a minimal reproduction + representative measurements so maintainers could validate quickly.
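The measurement step can be approximated with a small timing harness. This is not the harness behind the numbers in this write-up; it is a minimal sketch that times any command with the full environment and again with LD_* / DYLD_* removed. The workload shown is a placeholder, and the function names are hypothetical.

```python
import os
import statistics
import subprocess
import sys
import time


def median_runtime(cmd, env, repeats=5):
    """Return (median wall-clock seconds, True if every run exited 0)."""
    samples, all_ok = [], True
    for _ in range(repeats):
        start = time.perf_counter()
        result = subprocess.run(
            cmd, env=env, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL, check=False
        )
        samples.append(time.perf_counter() - start)
        all_ok = all_ok and result.returncode == 0
    return statistics.median(samples), all_ok


def compare(cmd, repeats=5):
    """Time `cmd` with the full environment and with LD_* / DYLD_* removed."""
    full = dict(os.environ)
    stripped = {k: v for k, v in full.items() if not k.startswith(("LD_", "DYLD_"))}
    return {
        "inherited": median_runtime(cmd, full, repeats),
        "stripped": median_runtime(cmd, stripped, repeats),
    }


# Placeholder workload; swap in a BLAS- or CUDA-sensitive script to see a real gap.
workload = [sys.executable, "-c", "sum(i * i for i in range(200_000))"]
for label, (seconds, ok) in compare(workload, repeats=3).items():
    print(f"{label:>9}: {seconds:.3f}s ok={ok}")
```

The exit status is reported alongside the timing because a stripped environment can also make a command fail outright, and a fast failure should not be mistaken for a fast run.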

The Fix

What Shipped Upstream

Security hardening is valuable, but in a developer CLI it must not silently rewrite the user's execution environment.

Stripping LD_* variables can reduce certain injection risks, but doing so by default in a dev-facing tool runner breaks correctness for legitimate workflows. I suggested making maximum hardening opt-in. Upstream shipped a pragmatic equivalent:

- Remove pre-main hardening from the Codex CLI (restoring environment inheritance for subprocesses).

- Keep pre-main hardening in the responses API proxy where it is more appropriate.

- My contribution: isolate the root cause, produce a minimal repro, attach representative measurements, and write an issue maintainers could validate quickly.

- Upstream outcome: fix merged and shipped; behavior called out in release notes.
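The opt-in posture I suggested can be sketched as an environment policy. This illustrates the suggestion only; it is not what upstream shipped (upstream removed the pre-main hardening from the CLI), and `child_env` and its `harden` flag are hypothetical names.

```python
import sys

LOADER_PREFIXES = ("LD_", "DYLD_")


def child_env(parent_env, harden=False):
    """Build the environment for a tool subprocess.

    Default: inherit everything. With harden=True, drop LD_* / DYLD_* and say so.
    """
    if not harden:
        return dict(parent_env)
    removed = sorted(name for name in parent_env if name.startswith(LOADER_PREFIXES))
    if removed:
        # Make the rewrite visible instead of silent.
        print(f"hardening: removed {', '.join(removed)}", file=sys.stderr)
    return {k: v for k, v in parent_env.items() if k not in removed}
```

The design point is that the default path returns an unmodified copy, and the hardened path announces exactly what it removed.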

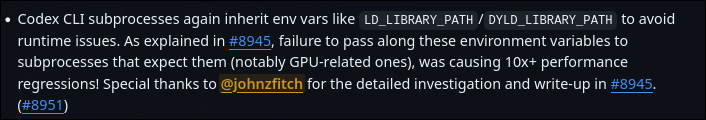

Release notes excerpt:

"Special thanks to @johnzfitch for the detailed investigation and write-up in #8945."

Measured Impact

Performance varies by workload and environment. The key point is the failure mode: stripping env vars can force slow, silent fallbacks.

Representative measurements from my verification:

| Workload | Before fix | After fix | Speedup |
|---|---|---|---|
| MKL/BLAS (repro harness) | ~2.71s | ~0.239s | 11.3x |
| CUDA workflows (library discovery / GPU fallback) | 100x-300x slower | restored | varies |
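The MKL/BLAS speedup follows directly from the two timings in the table; the short check below reproduces it, using only those two rounded values.

```python
before_s = 2.71   # MKL/BLAS repro harness, loader variables stripped
after_s = 0.239   # same harness, environment inherited

speedup = before_s / after_s
time_saved = 1 - after_s / before_s

print(f"speedup:    {speedup:.1f}x")
print(f"time saved: {time_saved:.0%}")
```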

Why This Matters

Beyond One Bug

This is the kind of engineering failure that only shows up in real-world environments:

- Subprocess correctness is a product feature: tools must behave the same "inside Codex" as they do in the user's terminal.

- Security controls must be explicit, not surprising.

- Performance regressions can hide inside "correct" behavior when the system silently falls back.

- When the substrate is wrong, everything built on top of it pays the tax (tooling, orchestration, higher-level features).

What This Demonstrates

Recruiter-Relevant

- Deep systems debugging (pre-main execution, env inheritance, dynamic linking)

- Performance engineering with hard evidence

- Security tradeoff reasoning grounded in practical threat models

- High-quality upstream collaboration (clear issue, minimal repro, verified fix, shipped release)

Why This Helped Shipping Velocity

Substrate bugs are expensive because they distort everything built on top:

- Tool calls become slower or flakier for affected environments.

- Performance investigations get noisy (it looks like "model slowness" or "network issues").

- Higher-level features that rely on predictable tool execution (orchestration, planning, collaboration) become harder to validate.

What I Would Add Next

Engineering Hygiene

- Integration tests asserting env var inheritance for subprocess execution

- A documented "secure mode" switch with explicit tradeoffs

- A debug command to dump the effective execution environment (for users and support)
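The first item could look something like the test below. It is a sketch, not a test from the Codex repository: `run_tool` is a hypothetical stand-in that simply spawns the command, and a real integration test would route the call through the actual tool runner instead. The sentinel directory is made up and only serves as a marker.

```python
import os
import subprocess
import sys


def run_tool(cmd, env=None):
    """Hypothetical stand-in for the tool runner under test; here it just spawns `cmd`."""
    return subprocess.run(cmd, env=env, capture_output=True, text=True, check=True)


def test_loader_vars_reach_tool_subprocess():
    sentinel = "/opt/example/sentinel-lib"  # made-up directory used only as a marker
    existing = os.environ.get("LD_LIBRARY_PATH", "")
    value = os.pathsep.join(p for p in (existing, sentinel) if p)
    env = dict(os.environ, LD_LIBRARY_PATH=value)

    probe = "import os; print(os.environ.get('LD_LIBRARY_PATH', ''))"
    result = run_tool([sys.executable, "-c", probe], env=env)

    # The child must see exactly the value the parent set.
    assert result.stdout.strip() == value, "runner changed LD_LIBRARY_PATH"
```

Written as a plain function with an assert, it can be collected by pytest or called directly. Running it against release builds matters, since the regression only appeared there.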